Read these reflections from our first cohort of Perioperative Data Science summer interns.

Henry Spradlin:

First, I would like to thank Dr. Berkowitz for looking into the position, Dr. Melvin for offering it to me and helping me through my project, and Dr. Godwin for all the other help I needed along the way. One of the things I quickly came to appreciate about this position was that everyone on the team is doing something that matters. During our daily check-ins, Dr. Melvin and Dr. Godwin would mention the projects they were working on, such as using AI with glucose monitoring or applying image analysis to radiology images.

When I first met with Dr. Melvin, he asked me what I wanted to get out of this internship, and I explained that I wanted to improve my Python skills. Machine learning fascinates me, but as I am going into aerospace engineering, I figured a general knowledge of Python would help me most in the future. Dr. Melvin started me right away on a project that improved my skills. The overarching goal of my project (in conjunction with Daniel Harrod) was to create a self-compiling database of past resident lectures for easy reference (nobody wants to watch 12 years of recordings for the two sentences of information they need). My part focused on taking a pre-transcribed lecture, summarizing it, and extracting the keywords. This fit my internship goal extremely well because it let me practice writing a lot of basic code, ingraining Python syntax in my head, while also learning a few more advanced concepts.

Andrew Glassford:

I spent my internship working on the Citation Count Prediction (CCP) project, where the goal is to create an AI model that can read a scholarly paper’s title, abstract, and metadata, and then (accurately) predict how many future papers will cite it. Dr. Godwin leads the CCP project, so I was technically under his supervision, although Dr. Melvin was always ready to give advice when needed.

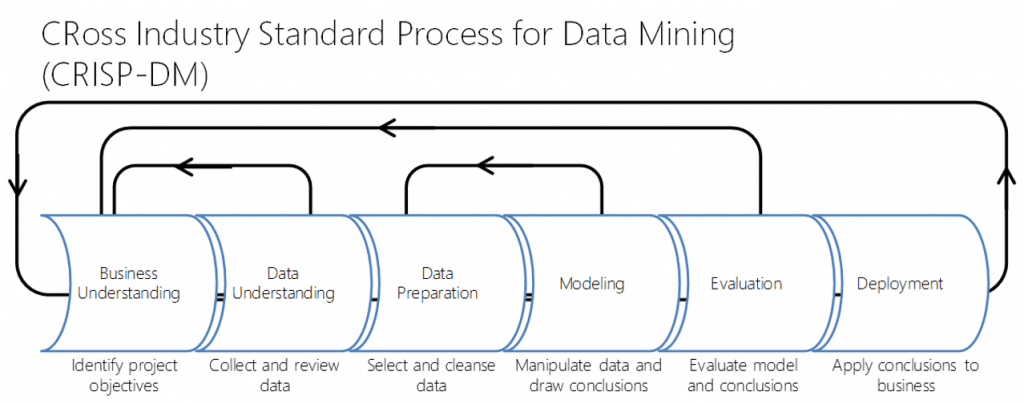

My first task was to recompile some of the test data that we were going to use. We wanted an extra data point, which meant altering and re-running the whole fetch-and-organize program. I was then tasked with finding a good model to read our data and make predictions. After experimenting with OpenAI’s GPT-3 and finding limited success, we opted for MATLAB’s text processing. After hybridizing NLP and MLP models built from scratch, we decided to replace the basic NLP with a pre-trained model called BERT. This version produced our best results to date, with an overall accuracy of 65% and an AUC-ROC of 0.71.

I learned a surprisingly broad array of skills, from using MATLAB to working on a research team. Apparently, there’s a difference between machine learning and deep learning, and just because it’s called “deep” doesn’t mean it’s actually better. I was exposed to a whole new world of business applications, like Azure DevOps and RocketChat. I even learned about, and got certified to handle, protected medical data! And, of course, no internship would be complete without some great mentors to patiently explain why exactly I was entirely wrong, several times over. My biggest takeaway from this internship probably sounds silly, but it’s true: just keep trying stuff. When an idea didn’t work, we didn’t try to brute-force some haphazard nonsense; we backed up, analyzed our results, and moved on to the next option. Good work advice, and good relationship advice, too. Overall, an excellent enrichment experience.

Daniel Harrod:

Coming soon!